This article is published in partnership with STORiES by MakeSense. You can find the original publication here.

– “I never thought he could win”

– “Who the hell voted out?”

In 2016, the people around me woke up with a serious hangover, and so did I. After months of pro-Hillary Facebook posts, dozens of shared John Oliver videos and feeds flooded with Emma Watson’s speech about her hopes for the first female president, I woke up with the strange feeling of having missed a key memo. How the hell did this happen? Who the hell voted out? Were my friends and I so disconnected from reality? As avid news readers, how could we have been so wrong all this time? It became clear that we had missed a crucial part of the population’s voice, or at least greatly underestimated its volume. Since our main sources of information were now online, we turned to our beloved tech giants and wondered: was it possible that, in the vast land of the web, we had been confined to a small bubble?

The tale of the Internet – But I thought you promised…

Back in 1967, the only ways for people to stay informed were their inner circle (close friends, family, neighbors), TV, and national newspapers. In the 1960s, only three national television networks were available in the US (CBS, NBC and ABC), with few differences in content across the three.

Julian Assange of WikiLeaks

But in the 1980s, all of that changed thanks to a couple of geeky blokes with a crazy dream. One of the great missions of the Internet was to make all of the world’s information available to anyone with a connection. Activists such as Aaron Swartz pushed for a fully transparent web, where every scientific paper and every book would be available to everyone. In the hackers’ philosophy, since individuals make better decisions when they have all the details of a situation, the same should hold on a larger scale: full transparency should make society collectively more intelligent and better at making decisions. The Internet promised a full, well-rounded opinion on every topic, with the possibility to compare views and really get the full story. And so, in the span of 30 years, over 1 billion websites were created, newspapers switched to online-first models, and blogging platforms such as Tumblr now host over 340 million blogs from all over the world.

You read what you think

Fast forward to today: the whole world’s information is available online. Access to it, however, is far more restricted than it seems, mainly because of interfaces: when designing the biggest library ever created, the key challenge was to design its doors. As websites evolved, two main interfaces got the most attention: search-based results (e.g. Google) and feeds (e.g. Facebook, Twitter), both powered by massive machine learning algorithms that aim to provide content suited as closely as possible to our likes and interests.

With search-based platforms, acquiring a specific piece of information starts with phrasing a request, and it is the search engine’s job to present the content that best fits our query. However, it is not part of its job to tell us when our request is misguided or incomplete.

If one searches for “Trump’s fortune” – the top results will show valuations of the president’s total wealth and assets.

However, you will need to look further to find the nuances behind that request: how much money he is currently losing or making, where his fortune comes from, and how many times his businesses have gone bankrupt.

But slightly change the initial request, to “Trump’s actual fortune” for example, and the results change completely to include detailed articles about the dynamics of his wealth.

Hence, the way we structure our query shapes the information we receive, sometimes leaving out critical details that would have given us not only an answer to our request but also the nuances that should come with it. In high school, when you asked your history teacher a specific question, he would never just answer: he would add context and weigh his answer before giving it to you. That is where Google still falls short today.

I know you better than you do

On the other type of platform, content (articles, ads, videos) is pushed to us through newsfeeds. With over 2 billion users, Facebook has to tailor its content to each one of us to stay attractive. To do so, the company uses powerful machine learning algorithms to understand our interests and show us content that is relevant to us. That makes sense: in the same way, if you are sitting next to a 17-year-old in a Sex Pistols T-shirt at a wedding, you will probably start a conversation by talking about your favourite punk band rather than the latest slump in Asian markets.

Interestingly, machine learning algorithms are much better than humans at using these kinds of clues to understand who a person is and what she likes.

Researchers such as Michal Kosinski showed that, simply by having access to the Facebook pages someone has liked, it is possible to predict her personality more accurately than most of her friends and family can.

While this research can have positive uses for marketing, it can also be risky in the wrong hands. A few years ago, a start-up called Cambridge Analytica started using this kind of research to profile every American Facebook user and sell its services to political campaigns. Working for the Leave campaign in the United Kingdom and for Trump’s candidacy, it could adapt the content pushed to each user to their psychological profile: an introverted, family-oriented person and an open, extroverted one would receive different articles supporting Trump’s platform, each tailored to their values. Politicians could go from a single national slogan to an individual argument for each American using Facebook (that is, 79% of online adults).

Cambridge Analytica analysing

This also means that most of the content pushed to us online is targeted to our values and opinions and rarely contradicts them. This effect, commonly called the “media echo chamber”, suggests that the Internet’s promise is broken and that we only interact with ideas within our bubble, missing the whole picture and the opposing arguments. Indeed, polls showed that 76% of Americans usually turn to the same sources for news, and according to Pew Research, 61% of millennials use Facebook as their primary source of news about politics and government. A different study, led by Demos, found that supporters of extremist parties were even less likely to engage with people holding different beliefs.

Slow down with the claustrophobia: the bubble counter-argument

Now, should we boycott the internet as a digital jail locking us away from context? Perhaps we should not be too pessimistic. If we look back fifty years, when there was no internet at all, was our bubble larger than it is now? All of these studies looked at the diversity of information accessed online, but they did not compare it with a situation where information was obtained only offline. If we were to switch back to physical newspapers, would we really consult more diverse sources and opinions? Sure, our view is not complete, but has it really shrunk? Well, perhaps not.

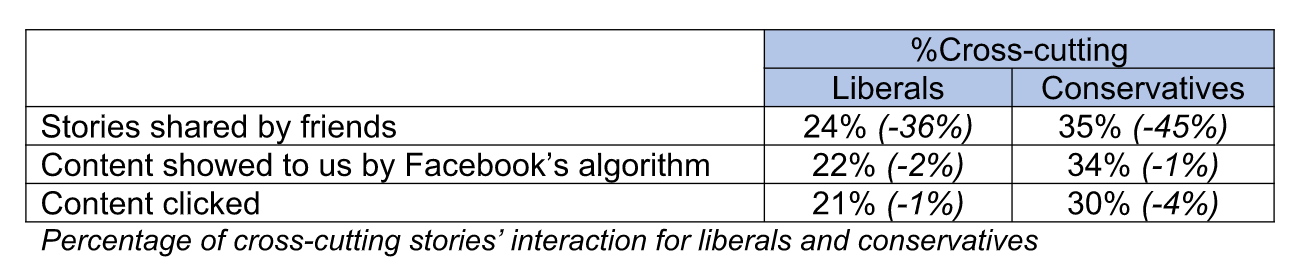

Researchers at Facebook studied the newsfeeds of 10.1 million active Facebook users in the US and looked at three factors contributing to the echo chamber effect: first, who our friends are and what content they share; second, which of the news stories shared by our friends the algorithm displays to us; and lastly, which of the displayed stories we actually click on. The researchers found that 24% of the news stories shared by liberals’ friends were cross-cutting (that is, from the opposing political side), against 35% for conservatives’ friends. This is simply because we tend to be friends with people who share our opinions, so our friends are more likely to share content that supports our views. The researchers then found that the algorithm narrowed the tunnel further, but to a much lower extent: because it is based on our previous clicks, it reduced the proportion of cross-cutting stories to 22% for liberals and 34% for conservatives. Finally, cross-cutting stories made up 21% of what liberals clicked on, and 30% for conservatives.

Percentage of cross-cutting stories at each stage for liberals and conservatives; (-X%): share of cross-cutting stories “lost” at that stage
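To make the “lost” figures concrete, the short sketch below recomputes them from the percentages quoted in this article. Reading “lost” as the relative drop from one stage to the next (rather than a drop in percentage points) is our assumption about what the chart’s (-X%) labels mean, and the `relative_losses` helper is purely illustrative.

```python
# Share of cross-cutting news stories (in %) at each stage, as quoted in the text:
# [shared by friends, shown in the feed, clicked on]
SHARES = {
    "liberals": [24, 22, 21],
    "conservatives": [35, 34, 30],
}


def relative_losses(shares):
    """Relative drop (in %) between consecutive stages, rounded to one decimal.

    Assumption: "lost" means (previous - current) / previous, not a
    difference in percentage points.
    """
    return [round(100 * (prev - cur) / prev, 1) for prev, cur in zip(shares, shares[1:])]


if __name__ == "__main__":
    for group, shares in SHARES.items():
        algorithm, clicks = relative_losses(shares)
        print(f"{group}: algorithm -{algorithm}%, own clicks -{clicks}%")
```

With these numbers, the ranking step removes about 8.3% of the cross-cutting stories liberals’ friends share and 2.9% of conservatives’, while their own clicking removes a further 4.5% and 11.8% respectively.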

We naturally engage more with content that reinforces our existing beliefs than with content that challenges them.

In the end, social media algorithms are therefore only partially responsible for the echo chamber effect: the fact that we tend to share the political views of our friends and families, and that we prefer to engage with content that supports our existing opinions (known as “confirmation bias”), is mainly responsible for our narrowed view, and it is far from new. The same thing happened offline, where adults tended to share the beliefs of their neighbors, friends and local news.

What can we do about it?

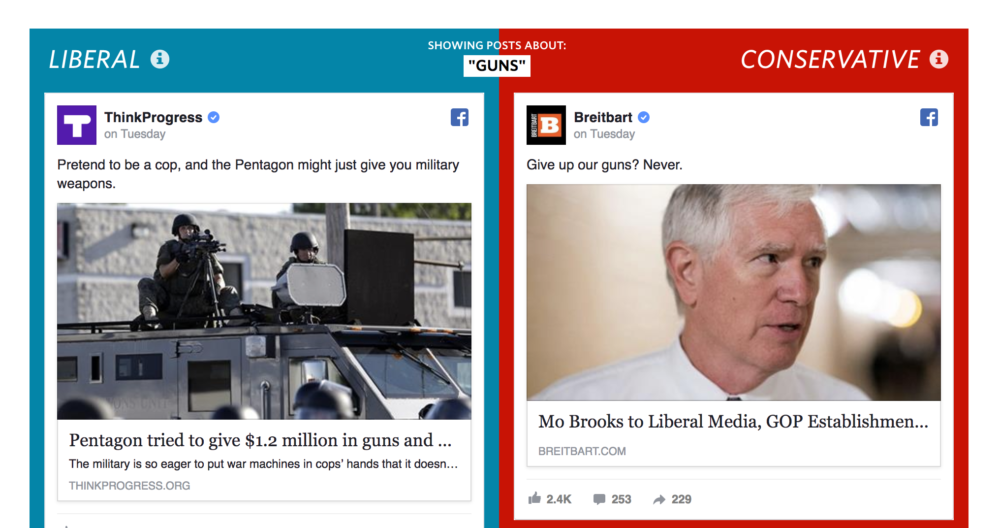

When navigating information online, it is crucial to take a step back and ask whether our opinion is the only one, and to what extent it is shared at a national or global level. Ideally, one could voluntarily follow a media source with a contrasting political viewpoint. It is also the role of content providers to give readers not only what they want to hear, but everything they should hear. This trend is giving rise to new media sources, such as Le Drenche in France, which cover news topics by opposing two different arguments, so that readers can form their opinion with a full understanding of the implications. The Wall Street Journal has also created a website that simulates and contrasts two Facebook feeds (one liberal, one conservative) on a given topic.

Blue Feed, Red Feed: the Wall Street Journal simulation is quite scary…

To stay relevant, social media algorithms need to surface content that we will love: their whole business model is at stake. But while it is true that Facebook will rarely suggest news stories opposed to your opinion, it is unlikely to increase your bias much. The social network has been repeatedly criticized in recent months and has been taking action to try to reduce the so-called “echo chamber” effect. It recently announced that it will increase the number of media sources suggested to you: for each article presented in your newsfeed, Facebook will start adding “related” articles from different publications, to allow for more variety.

And if one does want to broaden one’s perspective further, the whole internet is always available to search.

“Perhaps our bubble is not smaller: our world has just become so vast that we can now see it” (Kosinski).

Sources

Cheshire, T. (2017). Social media ‘echo chamber’ causing political tunnel vision, study finds. Sky News.

Copeland, D. (2010). The Media’s Role in Defining the Nation: The Active Voice. New York: Peter Lang Publishing, Inc.

El-Bermawy, M.M. (2016). Your Filter Bubble is Destroying Democracy. Wired.

Gottfried, J., Barthel, M., Shearer, E. and Mitchell, A. (2016). The 2016 Presidential Campaign – a News Event That’s Hard to Miss. Pew Research Center.

Greenwood, S., Perrin, A. and Duggan, M. (2016). Social Media Update. Pew Research Center.

Hosanagar, K. (2016). Blame The Echo Chamber On Facebook. But Blame Yourself, Too. Wired.

Mitchell, A., Gottfried, J., Barthel, M. and Shearer, E. (2016). The Modern News Consumer. Pew Research Center.

Mitchell, A., Gottfried, J. and Matsa, K.E. (2015). Facebook Top Source for Political News Among Millennials. Pew Research Center.

Statista (2017). Cumulative total of Tumblr blogs from May 2011 to April 2017 (in millions).

Wagner, K. (2017). Facebook Will Suggest More Articles For You To Read in News Feed To Help Fight Its ‘Filter Bubble’. Recode.
